Written on August 28, 2024

My journey down the rabbit hole of data leakage really got its start in 2019. I was working on several machine learning projects at a non-profit startup focused on detecting “early signals” in healthcare datasets: for example, predicting patient dropout during the course of clinical treatment, or classifying mothers at risk of early-term pregnancy.

Read More

Written on July 25, 2024

Segment Anything

Are you a purist at heart?

Read More

Written on February 13, 2020

Just some thoughts on what a DS-enabled environment should look like, at least from the perspective of needs I’ve had and projects I’ve worked on. The needs are staged as successive orders of approximation to my ideal:

- 1st Order: having access to a machine more powerful than a laptop

- 2nd Order: having access to multiple higher-powered machines that fit different needs

- 3rd Order: having access to multiple machines from the same central location

Read More

Written on January 27, 2020

In this lesson, we focus more on the operational aspects of deploying an edge app: we dive deeper into analyzing video streams, particularly those streaming from a webcam; we dig into the landscape of pre-trained models, learning to stack them into complex, beautiful pipelines; and we learn about MQTT, a low-resource telemetry transport protocol, which we use to send image and video statistics to a server.
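As a rough sketch of the telemetry side, the snippet below packages per-frame statistics as JSON and sends them through any client object that has a `publish(topic, payload)` method (paho-mqtt's `Client` has one). The topic names, field names, and helper functions are hypothetical, not the course's exact ones.

```python
import json

# Hypothetical topic names; a real app would agree on these with its server.
COUNT_TOPIC = "stats/people"
DURATION_TOPIC = "stats/duration"

def stats_messages(count, total, duration_s=None):
    """Build (topic, JSON payload) pairs for one frame's statistics."""
    messages = [(COUNT_TOPIC, json.dumps({"count": count, "total": total}))]
    # Only report a duration when a tracked person has just left the frame.
    if duration_s is not None:
        messages.append((DURATION_TOPIC, json.dumps({"duration": duration_s})))
    return messages

def publish_all(client, messages):
    """Send each message via an MQTT client exposing publish(topic, payload)."""
    for topic, payload in messages:
        client.publish(topic, payload)
```

With paho-mqtt, the client would be created and connected (e.g., `client.connect(host, port)`) before calling `publish_all`; note that the `Client` constructor arguments differ between paho-mqtt 1.x and 2.x. Keeping payloads as small JSON strings fits MQTT's low-overhead design.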

Read More

Written on January 17, 2020

In this lesson, we go over the basics of the Inference Engine (IE): which devices are supported, how to feed an Intermediate Representation (IR) to the IE, how to make inference requests, how to handle IE output, and how to integrate all of this into an app.
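Before an inference request, a camera frame usually has to be reshaped to the network's input layout. A minimal NumPy-only sketch, assuming the model wants a single `(1, C, H, W)` image (the target `H` and `W` would come from the loaded network's input info). The function name is hypothetical, and nearest-neighbour resizing stands in for the `cv2.resize` call an app would normally use.

```python
import numpy as np

def preprocess(frame, height, width):
    """Turn an H x W x C image into the 1 x C x H x W layout many IR models expect.

    Nearest-neighbour resizing keeps this NumPy-only; cv2.resize is the usual choice.
    """
    rows = (np.arange(height) * frame.shape[0] / height).astype(int)
    cols = (np.arange(width) * frame.shape[1] / width).astype(int)
    resized = frame[rows[:, None], cols[None, :]]   # shape (height, width, C)
    chw = resized.transpose(2, 0, 1)                # move channels first
    return chw[np.newaxis, ...]                     # add the batch dimension
```

The result is what gets passed as the input blob of an inference request; a 640x480 webcam frame becomes, e.g., an array of shape `(1, 3, 300, 300)` for a 300x300 model.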

Read More

Written on January 12, 2020

This lesson starts off by describing what the Model Optimizer is, which feels redundant at this point, but here goes: the Model Optimizer is used to (i) convert deep learning models from various frameworks (TensorFlow, Caffe, MXNet, Kaldi, and ONNX, which can support PyTorch and Apple Core ML models) into a standard vernacular called the Intermediate Representation (IR), and (ii) optimize various aspects of the model, such as size and computational efficiency, by using lower precision, discarding layers only needed during training (e.g., a Dropout layer), and merging layers that can be computed as a single layer (e.g., a multiplication, convolution, and addition can all be merged). The OpenVINO suite also performs hardware-specific optimizations, but those are the job of the Inference Engine.
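Why merging layers is legal: a convolution is linear, so a per-channel multiply and add after it (inference-time batch norm is exactly such a scale-and-shift) can be folded into the convolution's own weights and bias. The toy 1-D NumPy sketch below only demonstrates that algebra; it is not how the Model Optimizer is implemented, and the function names are hypothetical.

```python
import numpy as np

def conv1d(x, weights, bias):
    """Single-input, multi-output 'valid' convolution (cross-correlation, as in DL).

    weights: (out_channels, k), bias: (out_channels,)
    """
    return np.stack([np.correlate(x, w, mode="valid") + b for w, b in zip(weights, bias)])

def fold_scale_shift(weights, bias, scale, shift):
    """Fold y = scale * conv(x) + shift into the convolution's own parameters."""
    return weights * scale[:, None], bias * scale + shift
```

Folding turns three operations per output channel into one: `scale * (conv(x, W) + b) + shift` equals `conv(x, scale * W) + (scale * b + shift)`.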

Read More

Written on December 31, 2019

In this part of the course, we go over various computer vision models, specifically focusing on pre-trained models from the Open Model Zoo available for use with OpenVINO. We think about how a pre-trained model, or a pipeline of them, can aid in designing an app – and how we might deploy that app.
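One common pipeline step is to run a detector, then crop what it found and feed the crops to a second model. A sketch, assuming an SSD-style output blob of shape `[1, 1, N, 7]` with rows `[image_id, label, confidence, xmin, ymin, xmax, ymax]` and coordinates normalised to [0, 1]. Many Open Model Zoo detectors use this layout, but each model's documentation is the authority. Function names are hypothetical.

```python
import numpy as np

def parse_detections(output, width, height, threshold=0.5):
    """Convert a [1, 1, N, 7] detection blob into (label, x0, y0, x1, y1) pixel boxes."""
    dets = output.reshape(-1, 7).copy()
    dets[:, 3:7] = np.clip(dets[:, 3:7], 0.0, 1.0)   # guard against slightly out-of-frame boxes
    boxes = []
    for _, label, conf, xmin, ymin, xmax, ymax in dets:
        if conf < threshold:
            continue
        boxes.append((int(label),
                      int(xmin * width), int(ymin * height),
                      int(xmax * width), int(ymax * height)))
    return boxes

def crop_boxes(frame, boxes):
    """Cut detected regions out of an H x W x C frame, e.g. to feed a second model."""
    return [frame[y0:y1, x0:x1] for _, x0, y0, x1, y1 in boxes]
```

Each crop would then go through the second model's own preprocessing (for example, resizing to its input size) before its inference request.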

Read More

Written on December 30, 2019

My ultimate goal is to get OpenVINO working with my Neural Compute Stick 2 (NCS2). This is a little trickier than getting OpenVINO working on my Mac, primarily because you need Windows, Linux, or Raspbian to use the NCS2. This is despite the OpenVINO Installation Guide for macOS ending with a small note on hooking up a Mac to the NCS2. At the time of writing, that note basically just says, “You’ll need to brew install libusb.” Nothing more.

Read More

Written on December 27, 2019

When first considering installing Intel’s OpenVINO on my MacBook in my last post, I quickly determined that I did not meet the minimum specs required for running Intel’s OpenVINO… Or so I thought.

Read More

Written on December 20, 2019

Oftentimes when working with a database, it is convenient to simply connect to it via Pandas (read_sql_query) and a connection established through SQLAlchemy’s create_engine; then, for small enough data sets, just query all the relevant data right into a DataFrame and fudge around using Pandas lingo from there (e.g., df.groupby('var1')['var2'].sum()). However, as I’ve covered in the past (e.g., in Running with Redshift and Conditional Aggregation in {dplyr} and Redshift), it’s often not possible to bring very large data sets onto your laptop – you must do as much in-database aggregation and manipulation as possible. It’s generally good to know how to do both, though since straight-up SQL skills cover both scenarios, SQL is the more important one to master.
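The two styles side by side, as a small sketch: an in-memory SQLite database stands in for Redshift here (with Redshift, a SQLAlchemy engine from `create_engine` would be passed instead), and the `events` table is hypothetical.

```python
import sqlite3
import pandas as pd

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE events (var1 TEXT, var2 INTEGER);
    INSERT INTO events VALUES ('a', 1), ('a', 2), ('b', 5);
""")

# Option 1: pull every row into pandas, then aggregate locally.
df = pd.read_sql_query("SELECT var1, var2 FROM events", con)
local = df.groupby("var1")["var2"].sum()

# Option 2: push the aggregation into the database; only the summary travels.
remote = pd.read_sql_query(
    "SELECT var1, SUM(var2) AS var2 FROM events GROUP BY var1 ORDER BY var1", con
).set_index("var1")["var2"]
```

Both give the same per-group sums; the difference is that Option 2 moves one row per group over the network instead of every raw row.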

Read More

Written on December 20, 2019

The term “edge” is funny in a way because it’s literally defined as “local computing”, or “computing done nearby, not in the cloud.” Ten years ago this was just called “doing computer stuff.”

Read More

Written on December 16, 2019

If you’re just starting out in data science, machine learning, deep learning (DL), etc., then I can’t recommend Udacity enough. Years ago, after I graduated with my PhD in physics, I wanted to get in on AI research in industry, say at Google or Facebook. As a stepping stone, I took a job as a data scientist at WWE developing predictive models for various types of customer behavior on the WWE Network. Early on at this job, I serendipitously stumbled upon and enrolled in Udacity’s first offering of their DL nanodegree.

Read More

Written on November 27, 2019

Read More

Written on November 27, 2019

At work we have this predictive modeling project that has gone through multiple iterations, due to the project starting and stopping over time and different individuals taking it on after others had moved on to other things.

Read More

Written on November 21, 2019

A lot of my job is working with wearables in one way or another: wearing them, reading about them, using their data. Sometimes, though, I lose sight of what’s going on while I get lost in the particulars of a specific project, or the mundanities of the work week. So, in an attempt to keep current, I present to you (me): Wearables Weekly.

Read More

Written on November 11, 2019

Some notes and anecdotes about my experience at the Ai4 Healthcare conference in NYC, Nov 11-12.

Read More

Written on November 8, 2019

Let me just start out by saying: I’m proud of this title! Oftentimes I get a little lazy with the titles. Other times I think a boring, straightforward title is simply the way to go. But not this time! No, this time I let the morning coffee do the talking. (Thanks, morning coffee!)

Read More

Written on October 29, 2019

I’ve been watching through the video lectures of the “Deep Learning School 2016” playlist on Lex Fridman’s YouTube account. While doing so, I found it useful to collect and collate all the references in each lecture (or as many as I could distinguish and find).

Read More

Written on October 18, 2019

When someone says they have AWS skills, what do they mean?

Read More

Written on September 25, 2019

For certain types of data, random forests work best. Maybe more accurately, I should say a tree-based modeling approach works best, because sometimes the RF is beaten out by gradient boosted trees, and so on. That said, I’ve been kind of obsessively vetting random forests as of late, so this is in that vein.

Read More

Written on September 13, 2019

Today, I’m reading through 2009’s Variable Importance Assessment in Regression: Linear Regression versus Random Forest (at the time of this writing, academia.edu had a PDF of this paper). What follows are quotes and notes.

Read More

Written on September 13, 2019

I’ve been playing with random forests, experimenting with hyperparameters, and throwing them at all kinds of datasets to test their limitations… But prior to this month, I’d never used a random forest for any kind of time series analysis or forecasting. In a conversation with my brother about recurrent neural nets, I began to wonder if you can get any traction out of a random forest.
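A random forest has no notion of time, so the usual first step (one option among several, not necessarily the approach this post settles on) is to reframe the series as a supervised table of lagged values. A minimal sketch with a hypothetical helper name:

```python
import numpy as np

def make_lagged(series, n_lags):
    """Reframe a 1-D series as a supervised problem.

    Row r of X holds series[r : r + n_lags]; y[r] is series[r + n_lags], the value to predict.
    """
    series = np.asarray(series, dtype=float)
    X = np.column_stack([series[i:len(series) - n_lags + i] for i in range(n_lags)])
    y = series[n_lags:]
    return X, y
```

The resulting `X, y` can go into any tabular regressor (e.g., scikit-learn's `RandomForestRegressor`). Split the rows chronologically, training on earlier rows and testing on later ones, rather than shuffling; otherwise future values leak into training.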

Read More

Written on August 9, 2019

Strap a wearable on your wrist. Your other wrist. Your finger. Transmit the data through a pipeline ending in an S3 bucket on AWS. Now, SSH into a GPU-powered EC2 instance, because we’re about to flex the power of a recurrent + convolutional deep neural network hybrid on this sucka.

Read More

Written on June 26, 2019

Data leakage and illegitimacy can creep in from any number of places along a typical machine learning pipeline and/or workflow.
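One of the most common places is preprocessing: computing normalisation statistics on the full dataset lets the test rows influence the training features. A minimal NumPy sketch contrasting the leaky and clean versions (function names are hypothetical):

```python
import numpy as np

def standardize_leaky(X, n_train):
    # Leaky: mean/std include the test rows, so the test set shapes the training features.
    mu, sd = X.mean(axis=0), X.std(axis=0)
    Z = (X - mu) / sd
    return Z[:n_train], Z[n_train:]

def standardize_clean(X, n_train):
    # Clean: statistics come from the training rows only, then are reused unchanged.
    mu, sd = X[:n_train].mean(axis=0), X[:n_train].std(axis=0)
    Z = (X - mu) / sd
    return Z[:n_train], Z[n_train:]
```

The same rule applies to imputation values, target encodings, and feature selection: fit on training data only, then apply to validation and test data.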

Read More

Written on June 21, 2019

So you created a random forest, and it seems like it’s making great predictions! But now your boss wants to know why. This is where things can get tricky.
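One model-agnostic starting point is permutation importance: shuffle one feature at a time and measure how much a score drops. A from-scratch sketch (scikit-learn ships a fuller version as `sklearn.inspection.permutation_importance`); `predict` can be any fitted model's prediction function, and the metric must be higher-is-better.

```python
import numpy as np

def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Average drop in score when each column is shuffled; bigger drop = more important."""
    rng = np.random.default_rng(seed)
    baseline = metric(y, predict(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])  # break column j's link to y
            drops.append(baseline - metric(y, predict(X_perm)))
        importances[j] = np.mean(drops)
    return importances
```

Unlike impurity-based importances from the forest itself, this is computed on held-out data, but correlated features can still share or hide importance.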

Read More

Written on May 30, 2019

A while back, I wrote about CookieCutter Data Science, which is a project templating scheme for homogenizing data science projects. The data science cookiecutter was a great idea, I think, and my team uses it for all our projects at work. The key is encouraging/enforcing a certain level of standards and structure. It takes a little cognitive load to take on the data science cookiecutter the first time, but from then on it lightens the cognitive load for you and your team. This is essential when working on multiple projects at once, leaving projects idle for weeks to months at a time, and frequently swapping projects with others on the team.
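To make the idea of a shared skeleton concrete, here is a small sketch that creates a subset of the directories from the original (v1) Cookiecutter Data Science layout. The real `cookiecutter` template also generates a Makefile, setup files, and starter modules; this hypothetical `scaffold` helper only shows the structure.

```python
from pathlib import Path

# Subset of the Cookiecutter Data Science (v1) layout; trim or extend to taste.
LAYOUT = [
    "data/raw", "data/interim", "data/processed", "data/external",
    "models", "notebooks", "references", "reports/figures",
    "src/data", "src/features", "src/models", "src/visualization",
]

def scaffold(root):
    """Create the directory skeleton under `root` (existing dirs are left alone)."""
    root = Path(root)
    for rel in LAYOUT:
        (root / rel).mkdir(parents=True, exist_ok=True)
    (root / "README.md").touch(exist_ok=True)
    return sorted(str(p.relative_to(root)) for p in root.rglob("*") if p.is_dir())
```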

Read More

Written on May 29, 2019

A little while back, I bought a new ergonomic keyboard called the Kinesis Freestyle2. In tandem, I installed Karabiner so that I could really customize the keyboard. Ultimately, I had found a great setup where my hands could relax on the keys all day – I even had the mouse motion and clicks mapped!

Read More

Written on May 6, 2019

In the first post in this series, we covered memory strides for default NumPy arrays – or, more generally, for C-like, “row major” arrays. In the second post, we showed that a NumPy array can also be F-like (column major). More importantly, for those who like to switch back and forth between Pandas and NumPy, we found that a typical DataFrame is F-like (not always, but often – and in important cases). We also found that if one builds a windowing function based on NumPy’s as_strided assuming a C-like array, but instead uses F-like arrays in production, one shall be screwed.
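A small reproduction of that failure mode: one windowing function hard-codes C-order byte strides, the other reads the array's actual `strides`. Both function names are hypothetical. On a C-ordered array they agree; on the same data stored in F order, only the second returns the right windows.

```python
import numpy as np
from numpy.lib.stride_tricks import as_strided

def windows_assuming_c(arr, window):
    # Hard-codes row-major strides: only correct when arr is C-contiguous.
    n_rows, n_cols = arr.shape
    row_stride, col_stride = n_cols * arr.itemsize, arr.itemsize
    return as_strided(arr, shape=(n_rows - window + 1, window, n_cols),
                      strides=(row_stride, row_stride, col_stride), writeable=False)

def windows_from_strides(arr, window):
    # Uses the array's real strides, so C- and F-ordered inputs both work.
    n_rows, n_cols = arr.shape
    row_stride, col_stride = arr.strides
    return as_strided(arr, shape=(n_rows - window + 1, window, n_cols),
                      strides=(row_stride, row_stride, col_stride), writeable=False)

c = np.arange(12, dtype=float).reshape(6, 2)   # C order: strides (16, 8)
f = np.asfortranarray(c)                       # same values, F order: strides (8, 48)
```

Here `windows_assuming_c(f, 3)[1]` silently returns `[[4, 6], [8, 10], [1, 3]]` instead of rows 1 to 3 of the data, with no error raised. That silence is exactly what makes the bug dangerous in production.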

Read More

Written on May 6, 2019

In the previous post, we ignored the existence of Pandas and did things in pure NumPy. There was a really important reason for this: Pandas DataFrames are not stored in memory the same way as default NumPy arrays.

Read More

Written on May 3, 2019

Let’s say you have time series data, and you need to cut it up into small, overlapping windows. In the past, I’ve done this for spectral analysis (e.g., short-time Fourier transform), and more recently when working with recurrent neural networks.
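On NumPy 1.20 or later, `sliding_window_view` produces overlapping windows as a read-only view (no copying), and slicing the result gives a hop size. A minimal sketch with a hypothetical helper name:

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def make_windows(x, window, step):
    """Windows of length `window` starting every `step` samples, as a view of x."""
    return sliding_window_view(x, window_shape=window)[::step]

x = np.arange(10)
w = make_windows(x, window=4, step=2)   # starts at 0, 2, 4, 6
```

For multichannel data shaped `(time, channels)`, passing `axis=0` windows along time; the window dimension is then appended as the last axis.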

Read More

Written on April 22, 2019

As I’ve mentioned in previous posts, many of the references one will encounter when looking up methods for dealing with missing values are oriented towards statistical inference and obtaining unbiased estimates of population parameters, such as means, variances, and covariances. The most mentioned of these techniques is multiple imputation. I saw value in digging deeper into this area in general, despite it not being optimal reading for developing predictive models – especially those that might run in real time in an app or at a clinic of some sort. The reason is that, in tandem with developing a great predictive model, I generally like to develop corresponding models that focus on interpretability. This allows me to learn from both inferential and predictive approaches, and to deploy the predictive model while using the interpretable model to help explain and understand the predictions. However, once one becomes interested in interpretability, one becomes interested in inference – or, importantly, unbiased estimates of population parameters, etc. That is, I’m actually very interested in unbiased estimates of means, variances, and covariances – but in parallel to prediction, not in place of it.
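After multiple imputation, the same analysis is run on each of the m completed datasets, and the results are combined with Rubin's rules: the pooled estimate is the mean of the per-imputation estimates, and the total variance is the average within-imputation variance plus (1 + 1/m) times the between-imputation variance. A minimal sketch of just the pooling step (function name hypothetical):

```python
import numpy as np

def pool_rubin(estimates, variances):
    """Combine m per-imputation estimates with Rubin's rules.

    estimates: point estimate from each completed dataset
    variances: squared standard error from each completed dataset
    """
    q = np.asarray(estimates, dtype=float)
    u = np.asarray(variances, dtype=float)
    m = len(q)
    q_bar = q.mean()                   # pooled point estimate
    u_bar = u.mean()                   # average within-imputation variance
    b = q.var(ddof=1)                  # between-imputation variance
    total = u_bar + (1 + 1 / m) * b    # total variance of the pooled estimate
    return q_bar, total
```

The between-imputation term is what carries the extra uncertainty due to the missing data; a single imputation would silently drop it.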

Read More

Written on April 16, 2019

There will be some overlap in the subsections. That’s ok. I’m just trying in general to come up with some lessons learned over the past ~13 years or so.

Read More

Written on April 9, 2019

My thumb joints have been bothering me… And my wrists. Turns out, I’m probably plugging away at the keyboard way too much, teaching machines to learn and whatnot.

Read More

Written on April 3, 2019

It’s been over a month since I last worked on a Neo4j-oriented project. On another project today, some set data was provided to me in a CSV file. The goal was to come up with a decent visualization of all the sets and their intersections… I thought a graph visualization might be helpful, then realized it would be a perfect excuse to make sure I’m not getting rusty with Neo4j and Cypher.
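Before loading anything into Neo4j, it helps to see what the graph will contain: set sizes (node properties) and pairwise overlaps (weighted edges between sets). A plain-Python sketch, assuming a CSV with hypothetical `set` and `item` columns, one membership per row (the actual file's layout isn't given):

```python
import csv
import io
from collections import defaultdict
from itertools import combinations

def set_overlaps(csv_text):
    """Read (set, item) rows and return set sizes plus non-empty pairwise intersection sizes."""
    members = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        members[row["set"]].add(row["item"])
    sizes = {name: len(items) for name, items in members.items()}
    overlaps = {
        (a, b): len(members[a] & members[b])
        for a, b in combinations(sorted(members), 2)
        if members[a] & members[b]
    }
    return sizes, overlaps
```

In a graph model, items and sets become nodes with an item-to-set membership relationship, and these overlap counts are what a Cypher query over shared items would recover.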

Read More

Written on March 29, 2019

Ok, so it’s been about a week of reading, thinking, toying around… My original objective was to look into various ways to treat missing values in categorical variables, with an eye towards deploying the final predictive model. After reading over several ideas that included continuous variables (e.g., Perlich’s missing indicator and clipping techniques), I’ve re-scoped it a bit to missing values in general, specifically for predictive models.
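For a deployed model, the key constraint is that every fill value and bound must be learned at training time and reused unchanged at prediction time. A sketch in the spirit of the missing-indicator and clipping ideas above. The median fill and the 1st/99th percentile bounds are my assumptions, not necessarily Perlich's exact recipe, and the class name is hypothetical.

```python
import numpy as np

class MissingAwareTransformer:
    """Learn fill values and clipping bounds on training data; reuse them when predicting."""

    def fit(self, X):
        X = np.asarray(X, dtype=float)
        self.fill_ = np.nanmedian(X, axis=0)          # per-column training median
        self.low_ = np.nanpercentile(X, 1, axis=0)    # assumed clipping bounds
        self.high_ = np.nanpercentile(X, 99, axis=0)
        return self

    def transform(self, X):
        X = np.asarray(X, dtype=float)
        missing = np.isnan(X)                          # becomes the indicator columns
        filled = np.where(missing, self.fill_, X)
        clipped = np.clip(filled, self.low_, self.high_)
        return np.hstack([clipped, missing.astype(float)])
```

The indicator columns let the model learn whether missingness itself is predictive, while clipping keeps a wild value arriving at prediction time from pushing the model far outside its training range.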

Read More

Written on March 25, 2019

If you begin to search the web for info on imputing categorical variables, you will find a lot of great information – but most of it is not in the context of deploying a real-time prediction model†.
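In a real-time setting, the model will eventually see missing values and category levels that never appeared in training. One simple strategy (an illustration, not the only option) is to reserve explicit levels for both cases at training time. The sentinel strings and helper names below are hypothetical.

```python
import math
from collections import Counter

MISSING = "__missing__"   # hypothetical sentinel for absent values
UNSEEN = "__unseen__"     # hypothetical sentinel for rare or never-seen levels

def normalize(value):
    """Collapse the various spellings of 'missing' into one level."""
    if value is None or value == "" or (isinstance(value, float) and math.isnan(value)):
        return MISSING
    return value

def fit_levels(values, min_count=1):
    """Keep training levels seen at least min_count times; always reserve the sentinels."""
    counts = Counter(normalize(v) for v in values)
    kept = {level for level, n in counts.items() if n >= min_count}
    return kept | {MISSING, UNSEEN}

def encode(value, levels):
    """At prediction time, map anything unknown to UNSEEN instead of crashing."""
    level = normalize(value)
    return level if level in levels else UNSEEN
```

With `min_count > 1`, rare training levels are pooled into `UNSEEN` too, so the model actually gets training examples for that bucket.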

Read More

Written on March 23, 2019

This week’s content got a little more into actual machine learning models, namely simple multilayer-perceptron-style networks – i.e., going from a linear regression to a network with hidden layers and non-identity activation functions. Instead of using MNIST as a starting point, the course creators buck that trend and dive into Fashion MNIST. Very briefly, the course mentions that implicit biases may be inherent in a data set, and points out that such biases can unknowingly leak into machine learning models and cause downstream issues. However, most of this content was optional: one either explores the provided reference and follows it down the rabbit hole via the references therein, or not. The week’s quiz doesn’t even mention it. My 2 cents: take the detour, at least briefly.
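Why the non-identity activation matters: two stacked linear layers collapse into one linear layer, so without a nonlinearity the "deeper" network is still just a linear model. The course works in TensorFlow; this NumPy sketch with random weights only demonstrates the algebra.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 4))                       # 5 samples, 4 features
W1, b1 = rng.normal(size=(4, 8)), rng.normal(size=8)
W2, b2 = rng.normal(size=(8, 3)), rng.normal(size=3)

def identity(z):
    return z

def relu(z):
    return np.maximum(z, 0)

def two_layers(x, activation):
    """Hidden layer with the given activation, followed by a linear output layer."""
    return activation(x @ W1 + b1) @ W2 + b2

# With the identity activation, (x W1 + b1) W2 + b2 == x (W1 W2) + (b1 W2 + b2).
W, b = W1 @ W2, b1 @ W2 + b2
collapsed = x @ W + b
```

`two_layers(x, identity)` matches `collapsed` exactly (up to floating point), while `two_layers(x, relu)` does not. The ReLU is what lets the extra layer represent something a single linear layer cannot.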

Read More

Written on March 19, 2019

Way back, circa 2012, I was heavily into R and got introduced to ProjectTemplate, an R package that allows you to begin data science projects in a similar way – with a similar directory structure. It was very useful, and navigating projects became intuitive. Later, when digging a bit into single-page web apps, I again encountered this idea of maintaining a level of homogeneity in the file/folder structure across projects. And from my limited forays into Django and Flask, I know a similar philosophy is adopted there. But what about data engineering and data science projects primarily developed in Python? For my Python-oriented projects at WWE, I kind of came up with my own ideas on how each project should be structured… but this changed over time as I worked on many distinct, but related, projects. Part of this leaks into the question of how Git repos should be organized for similar projects (subtrees? submodules?), but in this post I’ll stick with just deciding on a good project structure.

Read More

Written on March 14, 2019

Haven’t used LaTeX much since I finished my PhD dissertation. I did try using it when I first began at WWE to write up mini papers on the projects we were working on… My boss said, “This is nice, but unnecessary.” Haha – and that was that.

Read More

Written on March 14, 2019

One: this is called “Part 1 of N” b/c I know I’ll be posting more on this, and don’t want to have to come up w/ crazy, catchy titles for each, and I don’t know how many there will be! Two: on a side note, I originally dated this file as 2018 instead of 2019, which I’ve done for almost every 2019 post so far. It’s 2019, Kev! Get with it! Three: it’s Pi Day. I love math and physics so much, but Pi Day? Meh.

Read More

Written on March 6, 2019

Just started working on a new-to-me TensorFlow-oriented project at work. The project is dusty, having been on the shelf for a year or so. I’m also dusty, having been working on other, non-TF-y things for the past 6 months. For the past few days, I’ve waded through another man’s Python code, editing, googling, and finally getting things to run – and that’s when I signed onto LinkedIn and saw Andrew Ng’s post about this new course.

Read More

Written on February 28, 2019

Straight to the point: working on a new project and needed to start using AWS CLI again. Figured I’d write down a few useful commands as I go.

Read More

Written on February 20, 2019

There is a lot to love about Neo4j, especially if you are modeling data that has a high relationship density and highly heterogeneous relationship types, patterns, and structures.

Read More

Written on February 19, 2019

When I first began working at WWE, my only SQL experience was through online courses, tinkering with SQLite, and using sample datasets to solve toy problems. My world was about to change.

Read More

Written on February 14, 2019

I’m in a room filled with impatient, frustrated citizens awaiting their civic fate: to be called for jury duty, or not?! People are chortling maniacally at their own jokes (“I should be honored to be here – chortle, chortle.”)

Read More

Written on February 12, 2019

Learning about a lot of different wearables and home sensors these days. Notes and links clutter my screen, sprinkled across a host of applications. Scribblings of tables and graphs charting out the landscape lay scattershot atop my desk, alongside printouts and to-do lists…at work…at home…and in my bookbag. Madness and mental mutiny are just around the corner!

Read More

Written on February 5, 2019

Here’s a weird thing: I could have sworn I wrote a post about this already, and that I gave it the same title: “Accuracy is not so Accurate.” But I’ve looked and looked: it’s not on my blog, it’s not in my email, it’s not…well, actually I gave up looking after that. But it probably wouldn’t have been in the next spot either! Like many of my writings, it likely exists somewhere – but out there in a nebulous quantum state, or somewhere in the aether.
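The classic illustration of why accuracy can mislead: on imbalanced data, a "model" that always predicts the majority class scores high accuracy while catching none of the cases that matter. A plain-Python sketch with made-up labels (5% positive class):

```python
def scores(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else None   # undefined with no positive predictions
    recall = tp / (tp + fn) if tp + fn else None
    return accuracy, precision, recall

y_true = [1] * 5 + [0] * 95   # 5% positive class
y_pred = [0] * 100            # a "model" that always says negative
```

Here `scores(y_true, y_pred)` gives 95% accuracy, zero recall, and undefined precision: a result that looks great on one number and is useless in practice.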

Read More

Written on January 25, 2019

Wright [1921] and Neyman [1923] were early pioneers of causal modeling, though the field did not fully mature until the 1970s with Rubin’s causal model (Rubin [1974]). In the 1980s, Greenland and Robins [1986] introduced causal diagrams, Rosenbaum and Rubin [1983] introduced propensity scores, and Robins [1986] introduced time-dependent confounding (followed up in Robins [1997]). Then there is the later work on causal diagrams by Pearl [2000] (side note: Pearl is from NJIT!). Post-2000, there are the methods of optimal dynamic treatment strategies (Murphy [2003]; Robins [2004]) and a machine learning approach called targeted learning (van der Laan [2009]). These methods are primarily for observational studies and natural experiments – things as they are in the world. The ideal is to use randomized controlled trials… But you just cannot do that for many things (think weather, astronomy, disease, etc.).

This post is based on watching through the first week’s lectures from Coursera’s Crash Course in Causality. Stay tuned for more…

Read More

Written on January 21, 2019

This is just an “I’ll thank me later” post.

Read More

Written on December 18, 2018

Machine learning models are great, right?!

Read More

Written on November 28, 2018

I was going through the Cypher Manual today, and started playing around with EXPLAIN and PROFILE to learn more about how Neo4j formulates execution plans. The most important piece of advice to extract is: Do not be lazy while writing your queries!

Read More

Written on November 21, 2018

What’s cool about Neo4j is that you can output the data almost any way you want: you can return nodes (which are basically shallow JSON documents), or anything from flat tables to full-on JSON document structures.

Read More

Written on November 20, 2018

Have I ever mentioned that I’ve given up trying to be creative with post names?

Read More

Written on November 15, 2018

Not going to lie to you: this post is not any better than googling “cypher syntax highlighting for vim.” In fact, that might be a better thing for you to do! But since you’re already here–

Read More

Written on November 13, 2018

Neo4j is awesome for working with relationship-heavy data – things that might be considered JOIN nightmares in a relational DB. For example, say you have designed a knowledge graph that maps lab tests to disease states, where a pathway might look like:

Read More

Written on October 17, 2018

At work, we want to have a database detailing various wearable devices and home sensors. In this database, we would want to track things like the device’s name, its creator/manufacturer, what sensors it has, what data streams it provides (raw sensor data? derived data products? both?), what biological quantities it purports to measure (e.g., heart rate, heart rate variability, electrodermal activity), whether or not its claims have been verified/validated/tested, whether or not it’s been used in published articles, and so on. For much of this, a standard relational database would be just fine (though some tree-like or recursive relationships begin to crop up when mapping raw sensor data to derived data streams).
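The tree-like part (raw streams feeding derived streams) can still live in a relational database with a self-referencing foreign key and a recursive CTE. A sketch using SQLite from Python's standard library; the table names, columns, and the device itself are hypothetical.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE device (id INTEGER PRIMARY KEY, name TEXT, manufacturer TEXT);
    -- A stream is either raw sensor output (parent_id NULL) or derived from another stream.
    CREATE TABLE stream (
        id INTEGER PRIMARY KEY,
        device_id INTEGER REFERENCES device(id),
        name TEXT,
        parent_id INTEGER REFERENCES stream(id)
    );
    INSERT INTO device VALUES (1, 'ExampleBand', 'ExampleCo');
    INSERT INTO stream VALUES
        (1, 1, 'PPG (raw)', NULL),
        (2, 1, 'Heart rate', 1),
        (3, 1, 'Heart rate variability', 2);
""")

# Walk from a raw stream down through everything derived from it.
lineage = con.execute("""
    WITH RECURSIVE descendants(id, name, depth) AS (
        SELECT id, name, 0 FROM stream WHERE id = ?
        UNION ALL
        SELECT s.id, s.name, d.depth + 1
        FROM stream s JOIN descendants d ON s.parent_id = d.id
    )
    SELECT name, depth FROM descendants ORDER BY depth
""", (1,)).fetchall()
```

This works, but every new kind of hierarchy needs another recursive query, which is the point where a graph database starts to look attractive.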

Read More

Written on October 16, 2018

At my last job, my main cloud was a Linux EC2 instance – there, I used Python and Cron to automate almost everything (data ETL, error logs, email notifications, etc.). The company at large commanded many other cloud features, like Redshift and S3, but I was not directly responsible for setting up and maintaining those things: they were merely drop-off and pick-up locations my scripts traversed in their pursuit of data collection, transformation, and analysis. So, while it was all cloud-heavy, it felt fairly grounded and classical (e.g., use a server to execute scripts on a schedule).

Read More

Written on October 5, 2018

In a previous post, we used the mighty pen (remember: fuck pencils) and rock-crushing paper to model data using entity relationship diagrams (ERDs). Today, we will use a pencil… Just kidding: today, we will use the pen-and-paper approach to model our data with graphs. Importantly, we will discuss how to translate between ERD and graph models.
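The core of the translation, sketched in Python with hypothetical tables: entity tables become labelled nodes, and a many-to-many join table becomes relationships (in a graph model the join table disappears as a "thing" and survives only as edges).

```python
# Rows as they might come out of an ERD-style schema (hypothetical tables).
devices = [{"id": 1, "name": "BandA"}, {"id": 2, "name": "RingB"}]
sensors = [{"id": 10, "type": "PPG"}, {"id": 11, "type": "Accelerometer"}]
device_sensor = [{"device_id": 1, "sensor_id": 10},
                 {"device_id": 1, "sensor_id": 11},
                 {"device_id": 2, "sensor_id": 10}]   # many-to-many join table

def to_graph(devices, sensors, device_sensor):
    """Entity tables become labelled nodes; the join table becomes relationships."""
    nodes = {("Device", d["id"]): {"name": d["name"]} for d in devices}
    nodes.update({("Sensor", s["id"]): {"type": s["type"]} for s in sensors})
    edges = [(("Device", r["device_id"]), "HAS_SENSOR", ("Sensor", r["sensor_id"]))
             for r in device_sensor]
    return nodes, edges
```

Going the other way, a relationship type that carries its own properties usually maps back to a join table with extra columns.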

Read More

Written on October 1, 2018

There are a lot of options out there for software to design these diagrams in, and I’ll get to using MySQL Workbench in another post (or who knows: maybe at the end of this post). But right now, I want to emphasize the good ol’ fashioned pen-and-paper approach (pen, b/c fuck pencils).

Read More

Written on September 26, 2018

At work, we have data assets – both potential and in-hand – and a set of business interests and use cases for these assets. How complex is this data? How much of it do we have, or will we potentially manage in the future? What is the best way to model and store this data? These are the types of questions that I’m now confronted with.

Read More

Written on May 15, 2018

Recently, the Selenium component of my Facebook Graph code broke. This is important b/c we need Facebook data during and after live events streamed on Facebook, as well as insights data collected from 400+ Facebook Pages at a regular, daily cadence.

Read More

Written on May 8, 2018

In the last article on the Instagram graph, I covered some fields and edges on the Instagram Account, Story, and Media nodes. In this post, I talk a little bit about the available metrics on the Instagraph.

Read More

Written on May 1, 2018

If you’re working for a media juggernaut, the Facebook Graph API is helpful in collecting engagement/consumption data on Facebook pages, posts, videos, and so on. Of course, its utility depends on how well you can automate the process of obtaining a user token and swapping that user token for a page token (or 100’s of page tokens!). With a page token, obtaining data is simple (strategizing how to best collect, clean up, and store all the data is another story): just issue a GET request. This can be done in the browser, using a Python package like requests, and so on.
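The GET request itself is just a URL of the form `https://graph.facebook.com/<version>/<node-id>?fields=...&access_token=...`. A sketch that builds one with the standard library; the version string, node id, and token are placeholders (use whatever API version your app targets), and `graph_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

GRAPH_ROOT = "https://graph.facebook.com"

def graph_url(node_id, fields, access_token, version="v3.0"):
    """Build a Graph API GET URL for one node and a list of fields."""
    query = urlencode({"fields": ",".join(fields), "access_token": access_token})
    return f"{GRAPH_ROOT}/{version}/{node_id}?{query}"
```

The URL can then be fetched with, e.g., `requests.get(url).json()`. Keeping URL construction separate from fetching makes it easy to log requests without leaking the token (strip the `access_token` parameter before logging).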

Read More

Written on April 12, 2018

Long story short: in Redshift at work, the default when you create a table is that the table is private to you unless you decide to grant permissions to other users. However, for those of us using Hive, this is not the case: instead, we all use the same user account, which has damn-near admin privileges, and we store the external table data in an S3 bucket that is accessible for reading/writing by a much larger, extended team. Why such sophisticated user and group permissions in Redshift, but not in S3 or Hive? My goal is to figure out how to develop more granular permissions for S3 and Hive users such that the defaults are more like what we have in Redshift (and ultimately make the case to our AWS admins/overlords).
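One common S3 pattern for "private by default" is an identity-based IAM policy that uses the `${aws:username}` policy variable, so each user can list and touch objects only under their own prefix. The sketch below builds such a policy as a Python dict. It assumes each person has their own IAM user (not the shared account described above), and the bucket name and `home/` prefix are hypothetical.

```python
import json

def per_user_prefix_policy(bucket, prefix="home"):
    """IAM policy letting each user read/write only under <prefix>/<their username>/."""
    user_path = f"{prefix}/${{aws:username}}"   # renders as home/${aws:username}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # allow listing, but only within the user's own prefix
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{user_path}/*"]}},
            },
            {   # allow object reads/writes/deletes under that prefix
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{user_path}/*",
            },
        ],
    }

policy_json = json.dumps(per_user_prefix_policy("team-bucket"), indent=2)
```

Shared team areas would then be granted explicitly with additional statements, which mirrors Redshift's grant-to-share model.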

Read More

Written on April 10, 2018

Recently, I’ve been administering a Linux server for some folks on the Content Analytics and Digital Analytics teams. With this great power comes–you guessed it–great responsibility. And one such responsibility is ensuring that the system’s resources are available and in good use. A few of our team members are developing Selenium scripts, which employ a Chrome webdriver and Xvfb virtual display, both of which seem to hang around long after they’ve stopped being used. This seems to happen when a function or script someone wrote crashes, or if they don’t properly close things (e.g., display.stop(); driver.quit()). I mean, that’s somewhat of a hypothesis on my part – the main point is that these processes build up into the 100’s and just hang around.
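A cleanup job could look for long-lived processes by name. This sketch assumes Linux procps `ps`, whose `-eo pid,etimes,comm` output gives PID, elapsed seconds, and command name. The process names and the six-hour cutoff are hypothetical defaults to adjust before running anything like this from cron.

```python
import os
import signal
import subprocess

def read_ps():
    """Snapshot of all processes: PID, elapsed seconds, command name (Linux procps)."""
    return subprocess.run(["ps", "-eo", "pid,etimes,comm"],
                          capture_output=True, text=True, check=True).stdout

def stale_pids(ps_output, names=("chromedriver", "Xvfb"), max_age_s=6 * 3600):
    """Pick PIDs of the named processes that have been running longer than max_age_s."""
    pids = []
    for line in ps_output.strip().splitlines()[1:]:   # skip the header row
        pid, elapsed, comm = line.split(None, 2)
        if comm.strip() in names and int(elapsed) > max_age_s:
            pids.append(int(pid))
    return pids

def terminate(pids):
    """Politely ask each process to exit (SIGTERM); escalate manually if needed."""
    for pid in pids:
        os.kill(pid, signal.SIGTERM)
```

Typical use would be `terminate(stale_pids(read_ps()))`. The longer-term fix is still in the scripts themselves: wrapping the Selenium work in `try`/`finally` so that `driver.quit()` and `display.stop()` run even when something crashes.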

Read More

Written on April 10, 2018

How many views did our live stream get on Facebook? How about YouTube and Twitter, or on our mobile app, website, and monthly subscription network? How do these numbers compare to each other and to similar events we streamed in the past? And, finally, how do we best distribute this information to stakeholders and decision makers in near-real time?

Read More

Written on March 28, 2018

Imagine that the Marketing Team at your company is designing an email campaign to ~1-2 million customers. Their goals are to increase customer retention and decrease subscription cancellation. Your data science team has been asked to help Marketing achieve these goals and, importantly, to quantify that success. How would you do this?
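One standard design (not necessarily the one this post arrives at) is to hold out a random control group that gets no email, then compare retention rates with a two-proportion z-test. A stdlib-only sketch; the counts in the example are made up.

```python
import math

def two_proportion_ztest(success_a, n_a, success_b, n_b):
    """Compare retention of a treated group (a) against a random holdout (b)."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))   # two-sided normal p-value
    return p_a - p_b, z, p_value

# Made-up example: 550/1000 emailed customers retained vs 500/1000 in the holdout.
lift, z, p = two_proportion_ztest(550, 1000, 500, 1000)
```

Here the lift is 5 percentage points with z of about 2.24 and a two-sided p of about 0.025. With a million-customer campaign, even tiny lifts become "significant," so reporting the effect size with a confidence interval matters more than the p-value.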

Read More

Written on March 26, 2018

About 2 weeks ago I returned to an old Selenium scraping project on my MacBook Pro, only to find errors strewn across the screen like the dead bodies of a recent war my script did not win… The solution turned out to be updating the chromedriver. Last week, the same thing happened to my coworker’s Selenium scripts on Windows. He independently arrived at the same conclusion and solution, which he brought up this morning, wondering aloud if we need to check the chromedriver on our Linux server. I had already been monitoring the situation: for whatever reason, the few daily automated scripts had not crashed, and in fact are still working as I write this. However! (Dramatic suspense: see next paragraph.)

Read More

Written on March 22, 2018

First things first: update and upgrade your system software.

Read More

Written on March 16, 2018

There are no DOM or window objects in Node; no webpage you are working with! The essence of Node is “JavaScript for other things.” Get out of the browser and onto the server!

Read More

Written on March 15, 2018

To best understand JavaScript promises, one should first understand what a “callback” is, and also what it is to be in “callback hell.”

Read More

Written on March 6, 2018

After finishing up the course on JavaScript and the DOM from Udacity, I figured I’d get back on the main track of the web development course. It was refreshing to get a sense of how much I had learned from doing these side courses…and yet I still could not understand the solution to one of the service worker quizzes. Like, at all.

Read More

Written on February 25, 2018

This is just a public service announcement. If you are using SQLAlchemy to connect to Redshift and you issue a LIKE statement using the % wildcard, you will run into some difficulty. This is because the % symbol is special both to the database driver underneath SQLAlchemy (e.g., psycopg2, which uses % to mark parameter placeholders, so a literal % must be escaped as %%) and to Redshift’s LIKE statement (wildcard). In short, your LIKE statements should look more like this:

con.execute("""
    SELECT * FROM table WHERE someVar LIKE '%%wtf%%'
""")

Read More

Written on February 23, 2018

I have no idea why, but yesterday when I tried opening VirtualBox to play around, my MacBook Pro told me that it failed to open. Huh? Whatever, let me reboot and try again… Failed to open! Ok, ok – let me google it. What do I write? “VirtualBox won’t open.” Too general… Oh, I know! Try opening it in Terminal.

Read More

Written on February 20, 2018

Read More

Written on February 16, 2018

I have literally rebelled against using diff and git diff forever. They all looked intimidating a long time ago, and I learned to cope without them. By cope, I mean I’ve survived diff’n files the brutish, caveman way: line-by-line. The reality is, before this past year, it wasn’t even a huge concern: I worked on a few, mostly one-off coding/research efforts at a time. But nowadays? I’m constantly switching between projects at work, and working on new ones that are extremely similar to old ones. This means I have a lot more need for organization, intelligent reuse of code, and the ability to quickly determine changes I made to projects I worked on last week, or last month, or just 1 of the 3 I worked on yesterday.
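The unified format that `diff -u` prints (and that `git diff` builds on) reads as: lines starting with `-` were removed, lines with `+` were added, and lines with a leading space are unchanged context. Python's standard `difflib` produces the same format, which makes for a quick way to see it on a toy change (the file names are made up):

```python
import difflib

old = ["import pandas as pd", "THRESHOLD = 0.5", "print(THRESHOLD)"]
new = ["import pandas as pd", "THRESHOLD = 0.7", "print(THRESHOLD)"]

diff = list(difflib.unified_diff(old, new, fromfile="last_week/model.py",
                                 tofile="today/model.py", lineterm=""))
for line in diff:
    print(line)
```

The `@@ -1,3 +1,3 @@` hunk header says the hunk covers lines 1 to 3 in both versions; only the `-THRESHOLD = 0.5` / `+THRESHOLD = 0.7` pair changed.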

Read More

Written on February 15, 2018

Time and again in my various data collection and reporting automations, I use similar functions to connect to Redshift or S3, or to make a request from the YouTube Data API or Facebook Graph. I keep these automations in a single repository with subdirectories largely based on platform (e.g., youtube, facebook, instagram, etc.). Within each subdirectory, projects are further organized by cadence (e.g., hourly or daily) or some other defining characteristic (e.g., majorEvents). The quickest/dirtiest way to re-use a function I’ve already developed in another project is to copy-and-paste the function into a script in the current project’s directory… But then something changes: maybe it’s an update to the API, or I figure out a more efficient way of doing something. Or maybe I just develop a few extra related functions each time I work on a new project, and eventually start forgetting what I’ve already created and which project it’s housed under.

Read More

Written on February 14, 2018

Our ultimate goal is to treat the Page Node as the root node of a graph, and to strategize how to traverse that graph and what to collect along the way.
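One way to frame "traverse from the Page root" is a breadth-first walk with a depth limit, where a callback returns a node's children (e.g., a Page's posts, then each post's comments). In this sketch `get_children` is a hypothetical stand-in for the actual Graph API edge requests, and `traverse` is an illustrative helper.

```python
from collections import deque

def traverse(root_id, get_children, max_depth=2):
    """Breadth-first walk from a root node, returning (depth, node_id) in visit order.

    get_children(node_id) should return ids reachable via the edges you care about.
    """
    seen = {root_id}
    order = []
    queue = deque([(root_id, 0)])
    while queue:
        node, depth = queue.popleft()
        order.append((depth, node))
        if depth == max_depth:
            continue                      # don't expand past the depth limit
        for child in get_children(node):
            if child not in seen:         # avoid revisiting shared nodes
                seen.add(child)
                queue.append((child, depth + 1))
    return order
```

The depth limit doubles as a cost control: each extra level can multiply the number of API calls.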

Read More

Written on February 13, 2018

What if you work on a Windows machine, but actively develop Python scripts that run on Linux Ubuntu? Installing a Linux virtual machine on your computer might sound pretty good! However, this isn’t my problem: I work on a MacBook Pro and rarely have an issue switching scripts between the two OS’s. So my problem is that I don’t have a problem, but still think the idea of running a Linux Ubuntu virtual machine on a non-Linux Ubuntu computer is cool AF.

Read More

Written on February 9, 2018

Let’s say we have two Git repositories: team_automations and side_project. The team_automations repository houses many

of our more recent, more maturely designed and versioned automation projects, while the side_project repository

contains one of our older, more scrappy automations. The more recent projects are all housed together, but respect an

easy-to-understand directory structure. This layout and its corresponding best (better?) practices weren’t obvious to us when we

were working on side_project (or on other_side_project and experimental_project, for that matter). But over time, we’ve

gained experience with project organization, Git version control, and maintaining our own sanity. For a long time, we’ve respected

the “if it ain’t broken, don’t fix it” rule, but our idealist tendencies are overcoming our fear (and possibly our wisdom). Our goal

is to merge side_project with team_automations, while minimizing how many things we break, bugs we introduce, and hopefully

maintaining Git histories across both repositories.

Read More

Written on February 8, 2018

In the previous post, the

Page/Albums Edge allowed us to

get a list of all Album nodes associated with a given

Page Node. From this, we

created a simple page_id/album_id mapping table (called page_album_map). Now, if you’re only

working with a single Facebook Page, then this table might

seem kind of silly. But! The second you begin working on a second Facebook Page,

it starts to take on some meaning. If you work for something like a record label, publishing company, or sports empire,

then it’s likely you’re tracking tens to hundreds of Facebook Pages, easy. No question here: the mapping

table becomes pretty dang important!
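A sketch of building that mapping table for several Pages at once, assuming each Page's albums response is shaped like a Graph API list response, `{"data": [{"id": ..., "name": ...}, ...]}`. Pagination is ignored, and the Page and album IDs below are hypothetical.

```python
import pandas as pd


def build_page_album_map(responses: dict) -> pd.DataFrame:
    """Flatten {page_id: albums_response} into a page_id/album_id table.

    Each albums_response is assumed to look like
    {"data": [{"id": "...", "name": "..."}, ...]} (paging not handled).
    """
    rows = [
        {"page_id": page_id, "album_id": album["id"]}
        for page_id, response in responses.items()
        for album in response.get("data", [])
    ]
    return pd.DataFrame(rows, columns=["page_id", "album_id"])


# Hypothetical responses for two Pages.
responses = {
    "111": {"data": [{"id": "a1", "name": "Tour Photos"}, {"id": "a2", "name": "Cover Photos"}]},
    "222": {"data": [{"id": "b1", "name": "Timeline Photos"}]},
}
page_album_map = build_page_album_map(responses)
print(page_album_map)
```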

Read More

Written on February 8, 2018

Let’s say you have a server: it’s yours and yours only. Your life’s work is on

it. Now let’s make it interesting: say that, suddenly, other people were given

the same credentials to log in and out.

Read More

Written on February 7, 2018

So – what are some tables I can create based on the user engagement with a

Page Node that I can collect on

the daily?

Read More

Written on February 2, 2018

I hack together HTML reports and HTML emails, but some parts of the web development class and

the intro-level JavaScript courses made me ask, “But do I really know WTF I’m doing?”

Read More

Written on February 1, 2018

First, let’s make the code blocks a bit friendlier looking: some definitions!

Read More

Written on January 30, 2018

con = connect_to_hive()

ex = con.execute

from pandas import read_sql_query as qry
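connect_to_hive() is the author's own helper and isn't shown here, so this sketch swaps in an in-memory SQLite connection (an assumption, purely so it runs anywhere) to show how the two shortcuts get used: `ex` runs statements for their side effects, and `qry` returns results as a DataFrame. The table and values are made up.

```python
import sqlite3

from pandas import read_sql_query as qry

# Stand-in for connect_to_hive(): an in-memory SQLite database.
con = sqlite3.connect(":memory:")
ex = con.execute

# ex(...) for statements run for their side effects...
ex("CREATE TABLE views (video_id TEXT, views INTEGER)")
ex("INSERT INTO views VALUES ('v1', 100), ('v2', 250)")

# ...and qry(...) for queries whose results you want as a DataFrame.
df = qry("SELECT video_id, views FROM views ORDER BY views DESC", con)
print(df)
```

With a real Hive or Presto connection, note that pandas officially supports SQLAlchemy connectables and sqlite3 connections; other DBAPI connections may still work but can trigger a warning in recent pandas versions.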

Read More

Written on January 29, 2018

Last year I developed a lot of Selenium/BeautifulSoup scripts in Python to scrape various social media

and data collection platforms. It was a lot of fun, and certainly impressive (try showing

someone how your program opens up a web browser, signs in to an account, navigates to various pages, clicks on buttons,

and scrolls around without impressing them!).

Read More

Written on January 28, 2018

I resisted using Selenium to acquire the user token. There just has to be a way to

use requests and the Graph API, right? I don’t know. Maybe. There are some promising

avenues, but all amounting to quite a bit of work and education!

Read More

Written on January 26, 2018

Using the YouTube Reporting API several months ago, I “turned on” any and every daily

data report available. That’s a lot of damn data. Putting it into Redshift would be a headache,

so our team decided to keep it in S3 and finally give Hive and/or Presto a shot.

Read More

Written on January 26, 2018

Some of the JavaScript being thrown around in the web development course is actually a little more complex

than I anticipated… So I figured it might be a good time to review some JavaScript basics by taking Udacity’s

Intro to JavaScript course. In this post,

I summarize my course notes.

Read More

Written on January 23, 2018

Over the past several months, I’ve been on several reconnaissance missions to uncover

what data is available from Facebook, how one might get it, and whether it can be

automated.

Read More

Written on January 22, 2018

At work, I have a particular python Selenium script that is scheduled in Crontab

to scrape some data every two hours. Little did I know that somehow, at one point,

the code broke… Assuming everything was working, I wondered:

Why the hell are there so many chromedriver processes hanging around in the process list?
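One common cause of orphaned chromedriver processes is a script that raises before it ever reaches driver.quit(). A sketch of a guard, assuming a Selenium-style driver object: in Selenium, quit() ends the whole session (and its chromedriver process), whereas close() only closes the current window. FakeDriver is hypothetical and exists only so the example runs without a browser.

```python
from contextlib import contextmanager


@contextmanager
def managed_driver(make_driver):
    """Yield a driver and always call quit(), even if the scrape raises."""
    driver = make_driver()
    try:
        yield driver
    finally:
        driver.quit()  # quit() tears down the session; close() would not


class FakeDriver:
    """Hypothetical stand-in for selenium.webdriver.Chrome."""

    def __init__(self):
        self.quit_called = False

    def get(self, url):
        raise RuntimeError(f"page layout changed: {url}")  # simulate a broken scrape

    def quit(self):
        self.quit_called = True


created = []


def make_fake():
    d = FakeDriver()
    created.append(d)
    return d


try:
    with managed_driver(make_fake) as driver:
        driver.get("https://example.com")
except RuntimeError as err:
    print("scrape failed:", err)

print("quit called:", created[0].quit_called)
```

With Selenium installed, the real call would look like `managed_driver(webdriver.Chrome)`; the scrape can still fail, but it no longer leaves a chromedriver process behind every two hours.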

Read More

Written on January 17, 2018

Reader beware: this post isn’t about whether or not I like consuming content

on Facebook’s new Watch

platform. Instead, it’s more of a follow-up to my exploration of using the Graph API

to keep a pulse on live video streams.

Read More

Written on January 17, 2018

In a previous post,

I showed how to overlay a bar graph in a column of data using the

DataFrame.style.bar() method in pandas, which is a nice feature when coding up

automated email reports on whatever-metric-your-company-is-interested-in.

Read More

Written on January 17, 2018

While trying to figure out how to ensure that the HTML report’s numeric values

had commas in select columns, I stumbled across a neat little tidbit in the

documentation for style.set_table_styles() that gives the email an interactive

vibe: highlight the row that the cursor is resting on.
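The post itself works through the Styler's set_table_styles() method; this sketch swaps in a plainer route (an assumption, not the post's method) that needs only DataFrame.to_html(): a per-column formatter adds thousands separators to select columns, and a small CSS rule with a `tr:hover` selector highlights the row under the cursor. Column names, values, and the colour are made up. Some email clients strip `<style>` blocks or ignore `:hover`, so the effect may only show in some readers.

```python
import pandas as pd

df = pd.DataFrame({"video": ["Intro", "Live stream"], "views": [1234567, 89012]})

# Thousands separators in select columns only (here: "views").
table_html = df.to_html(index=False, formatters={"views": "{:,}".format})

# Highlight whichever row the cursor is resting on.
style = "<style>tr:hover { background-color: #ffff99; }</style>"
email_html = style + table_html
print(email_html)
```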

Read More

Written on January 12, 2018

The Issue: When I SSH into my AWS/EC2 instance at work and romp around in iPython, the command line completion

functionality works just fine… That is, until I import pandas and dare hit the tab key! Then it pukes

its guts out and dies.

Read More

Written on January 12, 2018

A few months back I saw that Google was offering scholarships

for a mobile web development nanodegree they sponsor on Udacity. Seemed a little outside of my day-to-day,

but well within my span of interest. Mostly, it just seemed too cool to pass up, and without

any real risk. Only the potential for growth and opportunity!

Read More

Written on January 11, 2018

Sometimes you need to run a script that takes long enough to warrant web surfing, but

not long enough to really get too involved in another task. Problem is, sometimes

the script completes and you’re still reading about whether or not Harry Potter

should have ended up with Hermione instead of Ron. (Good arguments on both sides,

I must say!)

Anyway, point is, it would be nice to have some kind of notification that the script

completed its task. It’s actually pretty simple!

python myScript.py && echo -e "\a"

On this StackExchange page,

some people said they had issues with this when remotely logged in via SSH. I did not have this

problem when remotely logged into my EC2 instance on AWS, but in case you do, the recommendation is

to “redirect the output to any of the TTY devices (ideally one that is unused).”

./someOtherScript && echo -en "\a" > /dev/tty5

Read More

Written on January 9, 2018

Just going to leave this here for future reference!

Read More

Written on January 4, 2018

This is a quickie, but a goodie! Let’s say during an ETL process, you only want to run a second

script if a first one completes successfully. For example, say the first script uses an API to

extract some cumulative data from a social media site (e.g., views, likes, whatever), transforms it into some

tabular form, and loads it into S3 on a daily basis; and say the second script computes deltas on this

data to derive a daily top 10 table to be used by some interested end user in Redshift (e.g., daily top 10 viewed

YouTube videos, daily top 10 most-liked posts, daily top 10 whatever).
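In a shell, `first && second` already gives this behaviour. If the orchestration lives in Python instead, a minimal sketch with subprocess: run the steps in order and stop at the first non-zero exit code. The script names in the comment are hypothetical; the demo uses tiny inline Python commands so it runs anywhere.

```python
import subprocess
import sys
from typing import List, Sequence


def run_pipeline(steps: Sequence[List[str]]) -> int:
    """Run each command in order; stop at the first failure.

    Returns the number of steps that completed successfully.
    """
    completed = 0
    for cmd in steps:
        result = subprocess.run(cmd)
        if result.returncode != 0:
            print(f"step failed ({result.returncode}): {' '.join(cmd)}", file=sys.stderr)
            break  # don't compute deltas on a partial extract
        completed += 1
    return completed


# Real use might be [["python", "extract_to_s3.py"], ["python", "daily_top10.py"]] (hypothetical).
ok = run_pipeline([
    [sys.executable, "-c", "print('extract done')"],
    [sys.executable, "-c", "print('top 10 done')"],
])
print("steps completed:", ok)
```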

Read More

Written on January 3, 2018

So you have a personal AWS account, do ya?! And you’ve used the boto3 python package to transfer files

from your laptop to S3, you say?!

Read More

Written on December 22, 2017

So you use crontab to automate some data collection for one of some big media company’s many

YouTube channels. They think it’s awesome: can you do it for all the channels?

Read More

Written on December 18, 2017

So you’ve pip-installed boto3 and want to connect to S3. Should you create an S3 resource or an S3 client?

Read More

Written on December 17, 2017

In this post, we cover how to measure the performance of a video posted to a Facebook Page. We explore how to automatically

identify any live broadcasts, obtain their video ID, and track their performance.

Read More

Written on December 12, 2017

If you are a content creator/owner on YouTube, the Reporting API will provide you with a horde of data. You just

have to wait about two days for it.

Read More

Written on December 7, 2017

Let’s say you want to automate some HTML reports to be emailed daily. The reports should

have a consistent look, but clearly need to be dynamically generated to take into account

newly collected data and observations. This little tidbit should get you started!
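One way to keep a consistent look while the content changes daily is a fixed HTML skeleton with placeholders filled in at send time. This sketch uses the standard library's string.Template (a choice made for illustration; a templating engine such as Jinja2 would also work), and the field names and sample values are made up.

```python
from datetime import date
from html import escape
from string import Template

REPORT = Template("""\
<html>
  <body style="font-family: sans-serif;">
    <h2>Daily report for $report_date</h2>
    <p>$summary</p>
    <ul>
$items
    </ul>
  </body>
</html>""")


def render_report(report_date: date, summary: str, observations: list) -> str:
    """Fill the fixed template with the day's data (text is HTML-escaped)."""
    items = "\n".join(f"      <li>{escape(obs)}</li>" for obs in observations)
    return REPORT.substitute(
        report_date=report_date.isoformat(),
        summary=escape(summary),
        items=items,
    )


html_body = render_report(date(2017, 12, 7), "Views up vs. yesterday.",
                          ["Top video: Intro", "New subscribers: 340"])
print(html_body)
```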

Read More

Written on December 1, 2017

At work, I’ve been diving deep on everything and anything Facebook:

- What is the Open Graph Protocol?

- How do I use the Graph API?

- What data can we get from Facebook Insights or Analytics?

- How about Audience Insights? Automated Insights? Facebook IQ?

Read More

Written on November 16, 2017

I’m currently following along with Lynda’s

“Learning Flask,” which

requires one to use some Postgres.

Read More

Written on November 9, 2017

Been cleaning up my work email and found these notes on installing CUDA on an EC2 instance

from back in April. Figured some of it could potentially come in handy one day.

Read More

Written on November 7, 2017

We are starting to store some of our data in Hive tables, which we have access to via a Hive or Presto

connection. To dive right in, I turned to a few short courses on Lynda.com. In this log, I document

a few things from the course

Analyzing Big Data with Hive.

Read More

Written on November 3, 2017

In Lynda’s course,

“Programming Foundations: Design Patterns,”

we covered the following patterns:

- Strategy

- Observer

- Decorator

- Singleton

- Iterator

- Factory

Read More

Written on November 1, 2017

So you have a pandas DataFrame. Great!

Read More

Written on November 1, 2017

For several days, I’ve spent my morning watching lynda.com’s

“Python Parallel Programming Solutions”

course in an attempt to better understand threading and multiprocessing, and how they are implemented in

Python.

Read More

Written on October 27, 2017

In a previous post,

I covered a little bit about accessing Facebook’s Graph API through the

browser-based Graph Explorer tool, as well as how to access it using the facebook Python

module. Though the facebook module made things appear straightforward, it did seem to have some

setbacks (e.g., it only supported up to version 2.7 of the API).

Read More

Written on October 26, 2017

The function below captures bits and pieces of the things that I’ve learned

over the past several days. It is usable, for sure! But might not be ideal, e.g.,

I’m working on an object version of it that provides a little more flexibility (no

need to specify everything up front!). Also, there seem to be better ways to

include tables in an HTML email (e.g., pandas). That said, here is the state

of my art at this time. It certainly puts together a lot of pieces that

you can take or leave in your own code (attaching images, using inline images,

including data tables, etc).

Read More

Written on October 26, 2017

Automation: it’s what I do best.

Read More

Written on October 18, 2017

Here’s the gist: in CPython (the standard Python interpreter), only one thread can execute Python bytecode at a time, so CPU-bound

Python code effectively runs serially even if you start several threads. This is due to a safety feature known as the Global

Interpreter Lock (GIL): by letting only one thread run at a time, Python avoids conflicts over its internal state. Each computation

waits in line to take its turn. Nobody cuts the line. There is no name calling, spitting, or all-out brawls when things are taking too long. Everyone’s friendly,

but no one is happy!
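The GIL bites hardest on CPU-bound Python code. For I/O-bound work (waiting on an API, a web page, a disk), CPython releases the GIL while a thread blocks, so threads can still overlap those waits. A minimal sketch, with time.sleep standing in for a network call:

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(i: int) -> int:
    """Stand-in for an I/O-bound call; sleeping releases the GIL."""
    time.sleep(0.2)
    return i * i


start = time.perf_counter()
serial = [fake_request(i) for i in range(5)]
serial_time = time.perf_counter() - start

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as pool:
    threaded = list(pool.map(fake_request, range(5)))
threaded_time = time.perf_counter() - start

print(f"serial: {serial_time:.2f}s, threaded: {threaded_time:.2f}s")
```

For CPU-bound work, threads won't help in the same way; the multiprocessing module (or concurrent.futures.ProcessPoolExecutor) sidesteps the GIL by using separate processes.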

Read More

Written on October 17, 2017

Here’s the situation:

- I have a Selenium script that scrapes and collates data from a JavaScript-heavy target source

- There are multiple target sources of interest

- The data collection from each source should happen at about the same time

  - e.g., more-or-less within the same ~20-minute span is good enough

- If each source scrape were quick, one might target them in sequence; however, this is not the case!

  - Several of the targets are large and take way too much time to meet the “quasi-simultaneous” requirement

Some solutions include:

- create a separate Python script for each target and use crontab to schedule them all at the same time

  - however, any change in the codebase’s naming schemes, etc., could make maintaining each script a nightmare

  - ultimately, I want one script

- create a single Python script that takes parameters from the command line, and use crontab to schedule all runs at the same time

  - this is a much better solution than the first

  - however, I’m dealing with 30+ targets and would ideally like to keep my crontab file clean, e.g., can this be done with one row?

- create a single Python script that takes parameters from the command line, write a bash script that runs multiple instances of the Python script simultaneously by backgrounding each one (&), and use crontab to schedule the bash script

  - this is getting pretty good!

- learn how to use multithreading in python
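The bash-and-& option can also be driven from Python: launch one process per target with subprocess.Popen (which returns immediately, so the processes run concurrently), then wait for all of them, so a single crontab row can call a single launcher. The scrape.py name and --target flag are hypothetical; the demo substitutes a one-line Python command.

```python
import subprocess
import sys
from typing import Callable, Dict, List


def launch_all(targets: List[str], build_cmd: Callable[[str], List[str]]) -> Dict[str, int]:
    """Start one process per target at (roughly) the same time, then wait.

    Returns each target's exit code.
    """
    procs = {t: subprocess.Popen(build_cmd(t)) for t in targets}  # non-blocking
    return {t: p.wait() for t, p in procs.items()}


# Real use might be: lambda t: ["python", "scrape.py", "--target", t]  (hypothetical)
codes = launch_all(
    ["channel_a", "channel_b", "channel_c"],
    lambda t: [sys.executable, "-c", f"print('scraped {t}')"],
)
print(codes)
```

With 30+ targets, it may be worth capping how many run at once (e.g., launching in batches), since each Selenium scrape starts its own browser.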

Read More

Written on October 11, 2017

Maybe the IT department already set things up, but mounting a samba shared drive on my

MacBook Pro work computer was super simple.

Read More

Written on October 4, 2017

Yesterday,

I had issues getting the selenium python package installed on my corporate EC2 instance.

It turned out that the system’s default pip was not conda’s pip. After figuring this out,

and using conda’s pip to install selenium, everything was cool…

Read More

Written on October 3, 2017

I ran into this annoying issue before while trying to

install TensorFlow

on my work-provided g2.2xlarge.

Read More

Written on October 3, 2017

In a python program, I can use a try/except statement to avoid a crash. In the case of a would-be crash,

I can save some data locally and email myself about the failure. What if the program is supposed to log data every hour and

something outside the try/except statement fails? Though it may crash, we can minimize losses by having the

program run on a schedule using cron…
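A sketch of the try/except half of that idea: if collection fails partway, write whatever was gathered to a local file and hand an error message to a notifier. `notify` is a hypothetical placeholder for the emailing step (e.g., something built on smtplib); in the demo it just records the message. Crashes outside the try block are what the cron schedule covers, since the next run starts fresh.

```python
import json
import tempfile
import traceback
from pathlib import Path
from typing import Callable, List


def collect_hourly(fetchers: List[Callable[[], dict]], backup_path: Path,
                   notify: Callable[[str], None]) -> List[dict]:
    """Run each fetcher; on failure, save partial results and notify."""
    rows: List[dict] = []
    try:
        for fetch in fetchers:
            rows.append(fetch())
    except Exception:
        # Don't lose what we already have: dump it locally before bailing out.
        backup_path.write_text(json.dumps(rows))
        notify(f"collection failed after {len(rows)} row(s):\n{traceback.format_exc()}")
    return rows


def broken():
    raise ConnectionError("API timed out")


messages = []
backup = Path(tempfile.gettempdir()) / "hourly_backup.json"
rows = collect_hourly([lambda: {"views": 10}, broken, lambda: {"views": 12}],
                      backup, messages.append)
print(rows, messages[0].splitlines()[0])
```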

Read More

Written on October 3, 2017

So you’ve built a scraper. Great! But now what? Are you going to run it every hour manually?

Read More

Written on September 29, 2017

Let’s face it: my first post on the Data API is just brain spew and chicken scratch!

It’s certainly been a helpful reference for me as I’ve further played with the Reporting

and Data APIs – but it’s time for an update!

Read More

Written on September 27, 2017

When using Jupyter Notebooks (or iPython/Jupyter console/Qt), I take advantage of the fact that you can natively

run Unix/Linux commands (e.g., by prefixing them with !).

Read More

Written on September 27, 2017

Read More

Written on September 26, 2017

Connecting to Redshift from R is easy:

library(RPostgreSQL)  # assumed driver package for this connection

drv = dbDriver("PostgreSQL")

con = dbConnect(drv, host=host_production,

port='5439',

dbname='dbName',

user='yourUserName',

password='yourPassword')

dbExecute(con, query_to_affect_something)

dbGetQuery(con, query_something_to_return_results)

Read More

Written on September 26, 2017

https://developers.google.com/oauthplayground

Read More

Written on September 24, 2017

Lots of talk about YouTube lately. What about the other places on the web a media company might host

their content and want to better understand?

Read More

Written on September 20, 2017

Reader Beware

For some motivation and preliminary reading, you might find my

original exploratory post

on YouTube’s Reporting API helpful. In this particular post, I just want to cover some additional

technical details that I’m more aware of after having covered

Google’s Client API and

YouTube’s Data API.

Read More

Written on September 19, 2017

https://developers.google.com/youtube/v3/

Read More

Written on September 18, 2017

Previously, I wrote about how to use the YouTube Reporting API from Python. My hope

was that by dissecting and restructuring the provided code snippets into a more procedural

format, it would be easier for a newcomer to get a sense of what each line of code is

doing… Or at the least, allow them to use the code in an interactive python session

to see what each line does (whether or not they gain any further sense of it).

Read More

Written on September 15, 2017

For most content, YouTube provides daily estimated metrics at a 2-3 day lag. If you are working on a project

that requires recency or metric estimates at a better-than-daily cadence, scraping is probably the way to go,

and will allow you to obtain estimates of total views, likes, dislikes, comments, and sometimes even a few other quantities.

Read More

Written on August 25, 2017

To run headless browser scripts, I’ve been using PhantomJS. But all along, all I could think was, “Would

be nice if Chrome just had its own headless version.” Then as the story usually goes, I finally googled this.

Read More

Written on August 24, 2017

Many platforms you want to extract data from will provide CSV or Excel files. Manual download is

easy, but doing it every day is laborious. Furthermore, if you work for a media company, you might have hundreds

of Facebook Pages, YouTube Channels, and so on. At some point, an automated solution becomes worth the effort!

Read More

Written on August 23, 2017

Data doesn’t always come neatly packaged in a table or streaming through some API.

Oftentimes it’s just out there — free range flocks of it on the Wild, Wild Web, just waiting

for a cowboy to come by and herd it to the meat factory for slaughter.

Read More

Written on August 12, 2017

There are many services we use that provide data from their website… You

just have to sign in! Usually there is a dashboard, an Excel/CSV file, or

both. Scraping a dashboard is incredibly specific to the platform you are

interested in, depending on how that dashboard is coded. Scraping a file is

something I need to figure out and will cover in a future post.

Read More

Written on August 11, 2017

Yesterday, I covered how to scrape videos from a YouTube playlist. Today, imagine you are interested in

a channel that has multiple playlists, and you want to scrape ‘em all!

Read More

Written on August 10, 2017

Let’s say you want to monitor your YouTube Playlist on the daily or hourly: keep track of which videos

are getting viewed, liked, and so on. One way to do this is to

employ some Selenium – all you’ll need is the playlist’s list ID.

Read More

Written on August 8, 2017

Read More

Written on August 8, 2017

I use this package all the time, but only figure it out as I go along…and I often forget what I did the last

time. Here are a few refresher commands:

```python
import requests
import bs4
```

Read More

Written on August 3, 2017

The data we explore today (whatever day it is for you) is the mushroom data

found at the UC Irvine Machine Learning Repository.

Read More

Written on July 31, 2017

If you haven’t heard: accuracy is garbage.
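A quick illustration of why, with made-up labels: on an imbalanced problem, a "model" that always predicts the majority class scores high accuracy while catching none of the cases you care about. Precision and recall are computed by hand to keep the sketch dependency-free.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for a binary problem."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    tn = len(y_true) - tp - fp - fn
    return tp, fp, fn, tn


# 95 negatives, 5 positives; the "model" always predicts negative.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
accuracy = (tp + tn) / len(y_true)
# Precision is undefined when nothing is predicted positive; reported as 0 here.
precision = tp / (tp + fp) if (tp + fp) else 0.0
recall = tp / (tp + fn) if (tp + fn) else 0.0

print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.2f}")
# accuracy=0.95 precision=0.00 recall=0.00
```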

Read More

Written on July 28, 2017

Collection of notes on how to call R from Python, with a focus on how to use

R’s {dplyr} package in Python for munging around.

Read More

Written on July 27, 2017

To be as efficient as possible, just take a look at this cheat sheet to my cheat sheet:

Read More

Written on July 23, 2017

“The first scrape is the hardest, baby, I know.”

– Sheryl Crow in an Alternate Universe

Read More

Written on July 22, 2017

Udacity’s Deep Learning nanodegree was such a great experience, and I learned

so much about Python, TensorFlow, Keras, and – of course – neural networks.

Read More

Written on July 21, 2017

It could happen to any of us: you SSH into your EC2 and, “Wtf?” Nothing is working right.

Python doesn’t recognize libraries you’ve always used. You can’t source activate into another Conda environment.

“What is going on?!”

Read More

Written on July 20, 2017

Find the code and notes in my DLND repo:

Read More

Written on July 14, 2017

In RStudio, I use the “Material” color theme, which has a midnight blue background similar to the popular “solarized” theme.

In my never-ending quest to transform my Terminal/Vim/iPython set up into something more like RStudio, I wanted to figure out

how to do this in Vim.

Read More

Written on July 14, 2017

So you’re trying to install XGBoost?

Read More

Written on July 13, 2017

Here is a quick 1-2 about BeautifulSoup:

Read More

Written on July 5, 2017

Find the code and notes in my DLND repo:

Read More

Written on June 29, 2017

In my previous post, I was trying to figure out how to use Tmux to integrate a remote

vim and iPython session, while displaying images locally. For example, I wondered,

“Is it possible to create a TMux session that allows one to place QtConsole side-by-side with Vim, Bash, etc.?”

Read More

Written on June 26, 2017

An autoencoder is designed to reconstruct its input. In a sense, a perfect autoencoder

would learn the identity function perfectly. However, this is actually undesirable

in that it indicates extreme overfitting to the training data set. That is, though

the autoencoder might learn to represent a faithful identity function on the training set,

it will fail to act like the identity function on new data — especially if that new

data looks different than the training data. Thus, autoencoders are not typically

used to learn the identity function perfectly, but to learn useful

representations

of the input data. In fact, learning the identity function is actively resisted

using some form of regularization or constraint. This ensures that the learned

representation of the data is useful — that it has learned the salient features

of the input data and can generalize to new, unforeseen data.
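In symbols, one common way to impose such a constraint is an undercomplete bottleneck (one option among several; sparsity or denoising penalties are others). An encoder $f$ maps $x \in \mathbb{R}^d$ to a shorter code, and a decoder $g$ maps it back:

$$
h = f(x) \in \mathbb{R}^{k}, \qquad \hat{x} = g(h) \in \mathbb{R}^{d}, \qquad k < d
$$

$$
\min_{f,\,g} \; \frac{1}{N} \sum_{n=1}^{N} \left\lVert x^{(n)} - g\!\left(f\!\left(x^{(n)}\right)\right) \right\rVert_2^2 \; + \; \lambda\, \Omega(f, g)
$$

Here $\Omega$ is an optional penalty weighted by $\lambda \ge 0$. In the linear case, $g \circ f$ is a matrix of rank at most $k$, so it cannot be the identity on data that spans all $d$ dimensions; the network has to keep the directions that reconstruct the data best.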

Read More

Written on June 21, 2017

TMux is finally useful to me.

Read More

Written on June 21, 2017

All I have to say is, if all your data is in English, then you haven’t yet lived! Don’t you want to live?!

Read More

Written on June 15, 2017

Better know your history, right? Not if you’re Markovian. In Markovia,

everyone is blackout drunk and stumbling around. Nobody remembers anything.

History doesn’t matter.
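Formally, the Markov property says the next state depends only on the current one; conditioning on the rest of the history changes nothing:

$$
P(X_{t+1} = x \mid X_t = x_t, X_{t-1} = x_{t-1}, \dots, X_0 = x_0) = P(X_{t+1} = x \mid X_t = x_t)
$$

For a finite chain with transition matrix $P_{ij} = P(X_{t+1} = j \mid X_t = i)$, the state distribution $\pi_t$ (a row vector) therefore evolves as $\pi_{t+1} = \pi_t P$.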

Read More

Written on June 9, 2017

Is there a custom function you always use that is too specific to really create a library around, but that you

use so frequently that doing something like source(path_to_file) gets annoying? For me, it’s one I call rsConnect(),

which allows me to connect to Amazon Redshift without having to remember the specifics every single time.

Read More

Written on May 25, 2017

Find the code and notes in my DLND repo:

Read More

Written on May 22, 2017

Read More

Written on May 12, 2017

Need to reduce the dimensionality of your data? Principal Component Analysis (PCA) is often spouted as a go-to tool. No doubt, the procedure will reduce the dimensionality of your feature space, but have you inadvertently thrown out anything of value?
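One quick check before discarding components: look at the fraction of variance each principal component carries. A NumPy sketch on synthetic data (the data is made up; taking the SVD of mean-centered data is one standard way to compute PCA):

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic data: 3 features, most variance along the first, little along the last.
X = rng.normal(size=(500, 3)) * np.array([5.0, 2.0, 0.5])

Xc = X - X.mean(axis=0)                  # PCA works on mean-centered data
_, s, _ = np.linalg.svd(Xc, full_matrices=False)
explained = s**2 / np.sum(s**2)          # fraction of variance per component

for i, frac in enumerate(explained, start=1):
    print(f"PC{i}: {frac:.3f}")

k = 2
print(f"keeping {k} components retains {explained[:k].sum():.1%} of the variance")
```

Retained variance isn't the same as retained usefulness: a low-variance direction can still be the one that separates your classes.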

Read More

Written on May 11, 2017

As a kid, I loved to play ToeJam & Earl with my brother. The game’s music was epic and inspirational, at least as far as

we were concerned. Later on, in the future–now the past!–we would learn some musical instruments and jam out funk-rock

style improvs largely inspired by TJ & E sounds.

Read More

Written on May 11, 2017

Using a Jupyter Notebook or TensorBoard on AWS is straightforward with a personal account.

It’s a little trickier if you are using a work account with restricted permissions.

In this post, I will lay out my experience with both scenarios.

Read More

Written on April 20, 2017

At one point, long ago, I was using MATLAB for this project, IDL for that project, and R for yet another. For each language, I

used a separate IDE, and this introduced a productivity bottleneck.

Read More

Written on April 11, 2017

The responsibilities of my job and the projects I work on can vary from one day to the next. Turns out that a clever solution to a problem isn’t something I necessarily remember 100% when confronted with the same issue several weeks or months down the line.

Read More

Written on April 4, 2017

Find the code and notes in my DLND repo:

Read More

Written on March 20, 2017

Linear regression is a go-to example of supervised machine learning. Interestingly, as with

many other types of well-known data analysis techniques, a linear regression model can be

represented as a neural network: using known input and output data, the goal is to find the

weights and bias that best represent the outputs/response as a linear function of the

inputs/predictors. The neural network representation seamlessly integrates simple (one predictor),

multiple (more than one predictor), and multivariate (more than one response variable)

regression into one visual:
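A NumPy sketch of that view, assuming a single dense layer with no activation: outputs are $XW + b$, and gradient descent on mean squared error should land on the ordinary least-squares solution. Two predictors and two responses make this a multiple, multivariate case; the true weights are made up for the demo.

```python
import numpy as np

rng = np.random.default_rng(42)
n, p, q = 200, 2, 2                        # samples, predictors, responses
X = rng.normal(size=(n, p))
W_true = np.array([[2.0, -1.0], [0.5, 3.0]])
b_true = np.array([1.0, -2.0])
Y = X @ W_true + b_true + 0.01 * rng.normal(size=(n, q))

# "Network": one linear layer with weights W (p x q) and bias b (q,).
W = np.zeros((p, q))
b = np.zeros(q)
lr = 0.1
for _ in range(2000):
    Y_hat = X @ W + b                      # forward pass (identity activation)
    err = Y_hat - Y
    grad_W = X.T @ err / n                 # gradient of 0.5 * MSE w.r.t. W
    grad_b = err.mean(axis=0)              # gradient of 0.5 * MSE w.r.t. b
    W -= lr * grad_W
    b -= lr * grad_b

# Compare with least squares on [X, 1].
X1 = np.hstack([X, np.ones((n, 1))])
coef, *_ = np.linalg.lstsq(X1, Y, rcond=None)
print(np.round(W, 3), np.round(b, 3))
print(np.round(coef[:-1], 3), np.round(coef[-1], 3))
```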

Read More

Written on March 17, 2017

Singular spectrum analysis (SSA)……

Read More

Written on March 11, 2017

I’ve spent nearly a decade honing my .bash_profile, .vimrc, and other

startup files on my personal laptop, but I can’t say I think about them much.

It’s set-and-forget for long periods of time. To be clear, taking things for granted goes far beyond

startup files: Xcode, Homebrew and hordes of brew-installed software,

R and Python libraries, general preferences and customizations all around!

Read More

Written on February 26, 2017

Want to host your blog on GitHub? I got up and running by following this

how-to guide

from SmashingMagazine.

Read More

Written on February 11, 2017

Just the basics on this one.

Read More

Written on February 4, 2017

In Udacity’s Deep Learning nanodegree, we will be developing deep learning algorithms

in Jupyter Notebooks, which promotes literate programming. These Notebooks are a great way

to develop how-to’s and present the flow of one’s data science logic. They are similar to

notebooks in RStudio, but run in the browser and have a “finished feel” by default (whereas,

with R notebooks, you have to compile it to get the finished look).

Read More

Written on February 4, 2017

We will be using Python in the Deep Learning Nanodegree, specifically the Anaconda

distribution. Conda is a package management and virtual environment tool for creating

and managing various Python environments.

Read More

Written on January 18, 2017

While working with some tables in Redshift, I was getting frustrated: “I wish I could just

drag all this into R on my laptop, but I probably don’t have enough memory!” A devilish grin: “Or do I?”

Read More

Written on December 22, 2016

At work, a lot of people use DBVisualizer on Windows computers… This was ok to get up and running, e.g.,

learning about the various schemas and tables we have in Redshift. But at some point, its utility runs out:

you can’t really do anything with the data without writing it to a CSV file and picking it up in R or Python. So why

not cut out the middle man and just query data from R or Python?

Read More

Written on August 24, 2016

Mostly notes from StanfordOnline’s “Introduction to Databases,” but also notes from Googling things.

Read More

Written on August 16, 2016

When choosing a database, there is MongoDB, Cassandra, MariaDB, PostgreSQL, and on and on. One question that plagues my mind

when reading about all these possibilities is: How do you know which one to use? What use cases is a particular DB

optimized for? Which ones is it terrible for?

Read More

Written on October 26, 2015

It just so happens that I’ve fattened up quite a bit over the past month.

Read More

Written on October 14, 2015

Did you know that a correlation is similar to an inner product between two data sets?
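Concretely, Pearson's correlation is the inner product of the two mean-centered series divided by the product of their lengths, i.e., the cosine of the angle between them: $r_{xy} = \langle x - \bar{x},\, y - \bar{y} \rangle / (\lVert x - \bar{x} \rVert \, \lVert y - \bar{y} \rVert)$. A small NumPy check against np.corrcoef, with made-up data:

```python
import numpy as np


def corr_via_inner_product(x, y):
    """Pearson correlation as a normalized inner product of centered data."""
    xc = np.asarray(x, dtype=float) - np.mean(x)
    yc = np.asarray(y, dtype=float) - np.mean(y)
    return np.dot(xc, yc) / (np.linalg.norm(xc) * np.linalg.norm(yc))


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.1, 5.9, 8.2, 9.9]
print(corr_via_inner_product(x, y), np.corrcoef(x, y)[0, 1])
```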

Read More

Written on October 14, 2015

Read More

Written on October 10, 2015

It is rare that a scientist works in isolation, despite the existence of single-author papers. The problem is there is only one label: author. And this fudges with everyone’s heads.

Read More

Written on September 30, 2015

Read More

Written on September 21, 2015

Read More

Written on September 3, 2015

In a spatially 3D universe, one encounters two major types of waves: transverse and longitudinal. The waves are categorized into these two groups in response to the question: Does the wave vary parallel or perpendicular to its direction of propagation?
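In vector form, for a plane wave with wave vector $\mathbf{k}$ (the propagation direction) and perturbation amplitude $\delta\mathbf{u}$ (e.g., a displacement or field perturbation):

$$
\text{transverse: } \mathbf{k} \cdot \delta\mathbf{u} = 0, \qquad \text{longitudinal: } \mathbf{k} \times \delta\mathbf{u} = \mathbf{0}
$$

Sound waves in air are the classic longitudinal case; electromagnetic waves in vacuum are transverse.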

Read More

Written on September 3, 2015

“I ain’t never goin’ back to school!”

Read More

Written on September 2, 2015

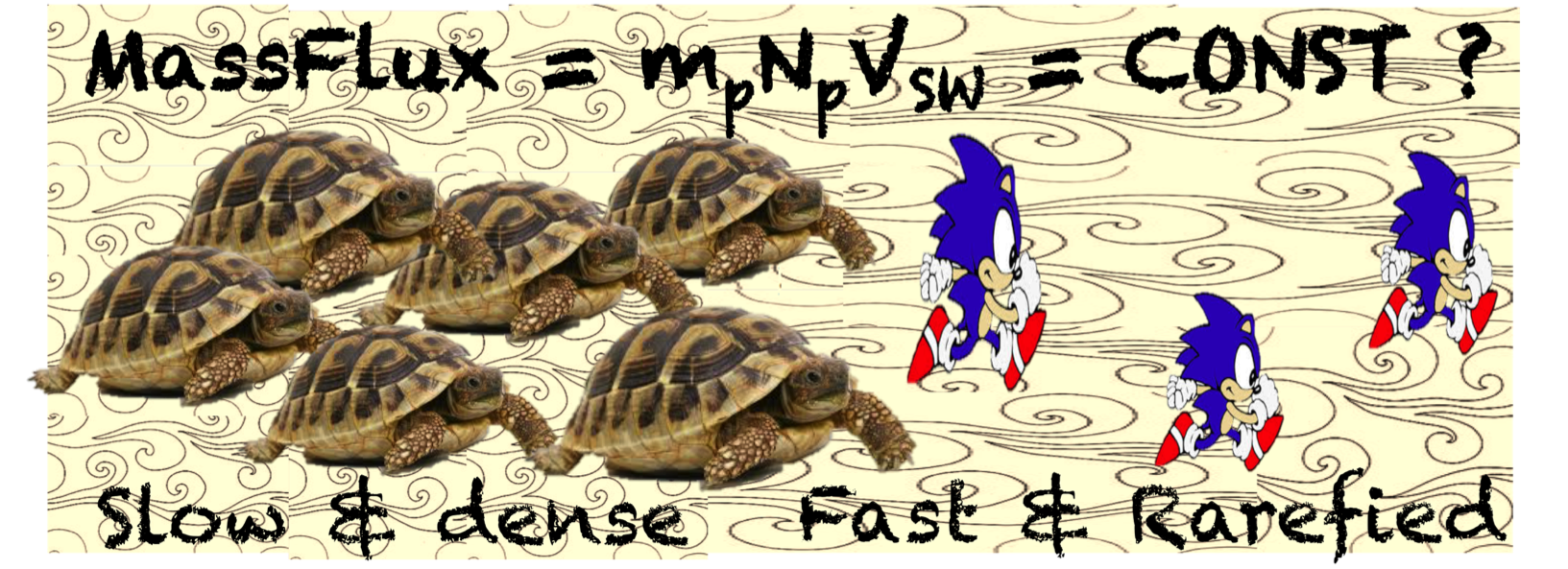

The solar wind is constantly crashing into the earth, alternating between times of turbulent cacophony and moments of serenity.

Read More

Written on August 11, 2015

There’s no way around it: you’re not going to find the meaning of life in this blog post.

Read More

Written on February 1, 2015

Read More

Written on November 25, 2014

Read More

Written on November 20, 2014

Let’s say there exists a server with a few years’ worth of daily riometer images. And suppose

we want to download all of ‘em and make some time-lapse-like movies for each year of data. First,

how do we quickly download 1000s of images? Secondly, how do we glue ‘em all together into a movie?
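A sketch of the download half, assuming the images follow a date-based naming pattern; BASE_URL and the filename format are hypothetical placeholders, not the real server's layout. Downloads are I/O-bound, so a thread pool lets many requests wait in parallel.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from datetime import date, timedelta
from pathlib import Path
from typing import List, Tuple

BASE_URL = "https://example.org/riometer"  # hypothetical server layout


def daily_urls(start: date, end: date) -> List[Tuple[str, str]]:
    """(url, local filename) for every day from start to end, inclusive."""
    out = []
    day = start
    while day <= end:
        name = f"{day:%Y%m%d}.png"  # hypothetical naming pattern
        out.append((f"{BASE_URL}/{day:%Y}/{name}", name))
        day += timedelta(days=1)
    return out


def download(pair: Tuple[str, str], dest: Path) -> bool:
    """Fetch one image; return False instead of raising on a missing day."""
    url, name = pair
    try:
        urllib.request.urlretrieve(url, dest / name)
        return True
    except OSError:  # covers URLError/HTTPError (missing day, timeout, etc.)
        return False


def download_all(start: date, end: date, dest: Path, workers: int = 16) -> int:
    """Download every day's image concurrently; return how many succeeded."""
    dest.mkdir(parents=True, exist_ok=True)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(lambda pair: download(pair, dest), daily_urls(start, end)))
```

Because the filenames are zero-padded dates, sorting them alphabetically also sorts them chronologically, which is the order a frame-stitching tool will want.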

Read More

Written on February 11, 2014

Read More

Written on January 30, 2014

Read More

Written on January 7, 2014

Read More

Written on March 20, 2013

Read More

Written on March 1, 2010

Read More

Written on February 21, 2010

Read More

Written on February 14, 2010

Read More

Written on February 7, 2010

Read More

Written on January 31, 2010

Read More